Optimising Machine Learning Inference with Intel’s OpenVino

Continuing the R&D previously completed on online inference for computer vision, we have collaborated with Intel and benchmarked Intel’s OpenVINO, a toolkit for high-performance deep learning inference.

Optimising Machine Learning pipelines is a key component of our work at Datatonic. Through our partnership with Intel, we have started to outline some guidelines around defining the optimal hardware on GCP for some ML workflows. We have built hardware benchmarks on Google Cloud Platform for training and batch inference for the recommender system of a Top-5 UK retailer and online inference for the computer vision model for Lush Cosmetics. The results of this internal R&D work can be viewed in the following blog posts:

- Accelerate Machine Learning on Google Cloud with Intel Xeon processors

- Machine Learning Optimisation: What is the Best Hardware on GCP?

The Optimisation Challenge: Minimise Latency, Maximize Throughput

I am sure you will find these two metrics mentioned in other blog posts about ML Inference Optimisation.

But why are these two metrics so important?

It’s about user-machine interaction

Imagine developing a computer vision mobile application to recognise different types of shoes. You are working on releasing a new version of the Machine Learning model in the cloud after you have spent the last 3 months trying to improve the accuracy of it.

Finally, the time has come to publish your model to serve real users!

The following week, you discover that the initial feedback from the users is not as positive as expected: Users are complaining that the detection process is slow and sluggish, making the overall user experience unpleasant.

In this case, like in many ML use cases, latency plays a fundamental role in the interaction between the user and the ML model.

It’s about reducing costs

In a scenario where latency is not critical, we may want to optimise the throughput (defined in terms of the number of successful requests processed per second (RPS)) to serve as many requests and users as possible.

In other words, we are interested in processing the highest amount of requests possible per second with the least amount of CPU required.

For example, take a streaming analytics pipeline where an ML model is used to segment users on the fly. This pipeline will receive events from various sources, it will process them to finally send this data to a Data Warehouse.

Thus, our primary goal for the pipeline is to be able to process as many events as possible in the same amount of time with the same infrastructure. The reason for this is mainly related to cost: being able to process all the incoming events in 1 hour instead of 2 hours when using the same infrastructure, can reduce the cost by 50%.

The optimisation of the ML inference will always be a fundamental part of the ML journey. Many developers and companies released frameworks to optimise these performances. Across the field, Intel has produced an exceptional example of this work.

Because of their deep expertise in hardware level optimisation, Intel released various toolkits and specialised hardware optimises every part of the ML journey.

Introducing OpenVINO

To continue our research in the topic of ML Inference optimisation, in collaboration with Intel we wanted to evaluate & benchmark OpenVINO, Intel’s opensource toolkit for high-performance deep learning inference.

OpenVINO supports optimisaation of Neural Networks developed with the most common Deep learning framework, allowing for optimised deep learning inference across multiple Intel platforms and with edge devices.

OpenVINO consists of mainly 3 parts:

- Model Optimiser: a command-line tool for importing, converting and optimising ML models developed with the most popular deep learning framework such as Tensorflow, Caffe, Pytorch, MxNet.

- Inference Engine: a unified API for high-performance inference on multiple Intel Hardwares. The inference engine can be used independently or with the OpenVINO model Server, a docker container capable of hosting OpenVINO models for high-performance inference.

- A set of other tools for supporting the creation and optimisation of ML models such as Open Model Zoo which consists of a repository with pre-trained models, Post-Training Optimisation tool which is a tool for model quantisation and Accuracy checker – a tool to check the accuracy of the different ML models.

Benchmark Architecture

Following the R&D previously done on Inference optimisation, we’ve decided to use the model built for LUSH cosmetics as a Computer Vision model to be trialled in OpenVINO using various CPU families in Google Cloud Platform.

We’ve also decided to benchmark OpenVINO against the most popular framework for ML inference: Tensorflow Serving. For the benchmark, we are using OpenVINO v.2020 Release 3 and Tensorflow Serving 2.2.0.

For both frameworks, we aimed to obtain the best performances tuning the Servers parameters. When doing the throughput oriented test, in TF Serving we activated the batching mode.

All the client requests were sent from Locust, a performance testing tool, deployed to Google Kubernetes engine.

For the benchmark, we evaluated OpenVINO and TFServing sending GRPC requests.

For each version of the Lush model, in this case, OpenVINO and Tensorflow, we’ve also created a quantised version of it, where the model’s weights are compressed from a float32 to an int8 representation.

We created 2 different benchmark tests:

- Latency oriented test, where the main metric is median latency (ms). In this test, we are going to simulate 1 user sending 1 request at a time.

- Throughput oriented test, where the main metric is sRPS (successful Requests Per Second). In this test we simulated up to 100 users, each one sending 1 request at a time.

In addition, the costs for both tests are also recorded. The main metric here is cost per 1 million requests.

Below is a graph of the benchmark architecture used for this R&D project.

The Hardware

We wanted to test the efficacy and effectiveness of OpenVINO and TF Serving to serve the Lush image detection model in a Kubernetes cluster, using all the machine types available in GCP. Three of these machine types are offered by Intel:

- Intel Xeon Scalable Processor Cascade Lake: available with the GCP machine type “n2”, with base frequency at 2.8 GHz, and with up to 3.4 GHz in sustained all-core-turbo

- Intel Xeon Scalable Processor Compute-optimised Cascade Lake: available with the compute-optimised version “c2” in GCP with base frequency equal 3.1 GHz with up to 3.8 GHz in sustained all-core turbo

- Intel Xeon Scalable Processor Skylake: available with the GCP machine type “N1”, with base frequency at 2 GHz with up to 2.7 GHz in sustained all-core turbo

The final machine chosen is the AMD GCP machine type “N2D”, 2nd Gen of AMD EPYC™ Processors with a base frequency of 2.25 GHz, an effective frequency of 2.7 GHz, and a max boost frequency of 3.3 GHz.

For the benchmark, each of these machines were chosen to have 8 vCPUs.

We have 4 different types of models, where every model is tested in 4 different machine types in GCP. In total, the Server-side consists of 16 different endpoints available.

Results

Overall, the results of our benchmarks show that OpenVINO is up to 4 times more performant than Tensorflow Serving.

These results are consistent for both Latency and Throughput tests and directly affect the costs for a million requests.

Our benchmark shows that the best performances in OpenVINO are obtained with Intel CPUs and in particular the most recent CPU Family, Cascade Lake.

For both OpenVINO and Tensorflow Serving, we’ve noticed a benefit gained by the quantisation process of the Machine Learning Model.

For both benchmarks, we have found really interesting the fact that when looking only at Tensorflow Serving we have that the Skylake CPU is the worst in terms of performance whilst the AMD CPU is the best when instead we look at the results with OpenVINO we have that the AMD CPU is the worst CPU.

This confirms the amazing work made by Intel in optimising OpenVINO for their CPU platforms.

Latency Oriented Benchmark

Here you can see the results of the latency-oriented benchmark. When looking at latencies, it is important to remember that the lower the latency, the more desirable it is.

From this chart, we can see that OpenVINO strongly outperforms TF Serving by at least 4x on every machine. Overall the c2-standard-8 machine, Intel’s compute-optimised Cascade Lake, offers the best performance. The quantized model optimised by OpenVINO is consistently the fastest, offering an impressive 4ms of latency on the c2-standard-8 machine and outperforming TF Serving quantized by over 4x.

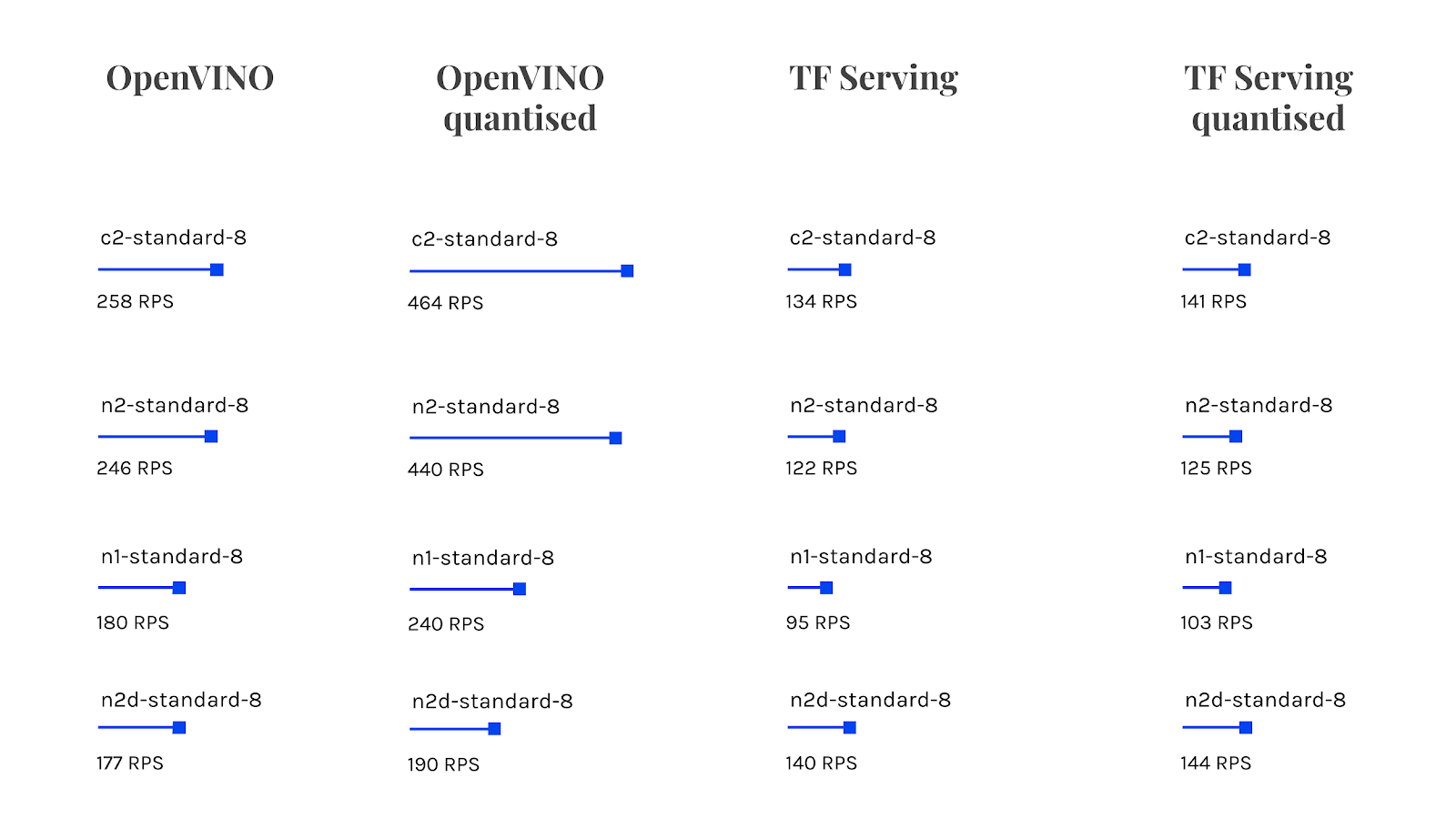

Throughput Oriented Benchmark

Here you can see the results of the throughput-oriented benchmark. Unlike latency, the higher the throughput the better it is.

Once again OpenVINO is truly impressive, outperforming TF Serving on every machine. The quantized OpenVINO model achieves a staggering 464 requests per second on the c2-standard-8 machine. In the case of OpenVINO, the throughput particularly benefits from quantisation, with up to 55% more throughput than the normal model.

Cost Comparison

As a metric for the comparison, we are using the cost of serving 1 million requests.

We define the cost of serving 1 million latency-oriented requests, as:

Cost per 1M request (c/r) = Latency (seconds/request) * Cost per Second (c/s) * 1M

We instead define the cost of serving 1 million throughput-oriented requests, as:

Cost per 1M request (c/r) = 1/ ( Max throughput (requests/s) / Cost per Second (c/s)) * 1M

From this chart, we can notice that the cost calculations for both solutions are directly affected by the benchmark results. Like for the performance benchmark, the adoption of OpenVINO is able to reduce costs up to 4 times.

Something we’ve found interesting when doing this calculation is the fact that the most cost-effective solution for latency it’s using the compute optimised version of Intel Cascade Lake, of course, this calculation is done assuming a scenario where the rate of requests sent is constant, it does not take into account the fact that in a real scenario there are moments where the ML Server is idle.

Update: in partnership with Intel we continued to optimise the Tensorflow Serving setup.

We made 4 changes in the setup of the Server:

- Use the Intel Optimised Tensorflow Serving MKL Image

- Use the new Tensorflow version 2.4

- Enabled TF_ENABLE_MKL_NATIVE_FORMAT option in the DockerFile

- Optimised the inference graph with Tensorflow’s toolkit for inference optimisation

These changes allowed Tensorflow Serving to have similar results with OpenVINO. You can find below the updated results comparing Tensorflow Serving with OpenVINO for the non-quantized Lush model.

Lush’s Feedback

After the impressive result, we contacted Lush Cosmetics for feedback. Here is what they had to say:

“We are pleased with the research carried out by Datatonic using the Product Classification Computer Vision model in our Lush Lens app as a benchmark. Our model runs on mobile at the moment, but we would consider moving to 2nd Gen Intel Xeon Scalable Processors in the future as the best hardware solution for new applications of our model on Google Cloud.”

Simon Ince – Tech Research & Development Lead at Lush Cosmetics

Where to use OpenVINO?

Our results clearly show the performance benefit in using OpenVINO against TF Serving for a deep learning use case.

We are really impressed by these results and by Intel’s work, for that we can definitely recommend OpenVINO for any Deep Learning solution, especially for use cases where the Inference is particularly compute-intensive such as Image, Audio or Video Detection.

The presence of the OpenModel Zoo as a repository of pre-trained models, the possibility to quantise the model through model calibration with an infinite number of settings available, and the possibility to use one model server for models trained in any popular deep learning framework, make this toolkit complete and ready to be used in a production environment.

As Google Cloud Machine Learning Specialization Partner of the Year, Datatonic has a proven track record in developing cutting-edge solutions in AI, Machine Learning and Cloud Modernization. Driven by technical excellence in Data Science and in-depth industry experience, we’ve delivered AI and Machine Learning powered solutions for top-tier clients globally.

Want to know how to design and develop hyper-personalized experiences? Download our three-step guide, “How to be Hyper-Relevant“, partnering with Intel.