Machine Learning Optimisation: What is the Best Hardware on GCP?

Optimisation of Machine Learning pipelines and hardware is a key component of our work here at Datatonic.

A Machine Learning pipeline is generally defined as a series of iterative steps ranging from data acquisition and feature engineering to model training, serving and versioning. Using this pipeline tailored Machine Learning solutions can be created to target specific use cases. While comprehensive, this definition often neglects one fundamental component: where to run the pipeline.

For most applications, choosing the right hardware is a fine-tuning process of its own, and a complicated one as there are no general guidelines to follow.

Through our partnership with Intel, we have defined a set of best practices around the best hardware choice to maximize speed and cost for some of our most typical applications on Google Cloud Platform. Following our benchmark results on Intel Skylake, we then revisited two of our previous business use cases: training and batch inference performance on a Recommender System built for a Top-5 UK retailer, and online inference performance on the Product Recognition Computer Vision model built for Lush Cosmetics. Both solutions were developed in TensorFlow on various CPU and GPU offerings on GCP.

The benchmark

All benchmarks were run on Google Cloud Compute Engine on 8 vCPUs, using the Intel-optimised TensorFlow 1.14 release available in the Deep Learning Image catalogue. The benchmarks compare the same model on 5 different hardware offerings in Google Cloud Platform, with results averaged over 100 runs to compensate for variance introduced by multi-tenant usage on the cloud. The recorded metrics were cost and speed.

The hardware

N1 Instances – 1st Gen Intel Xeon Scalable Processors (code name Skylake)

The general-purpose n1 instances are based on first-generation Intel Xeon Scalable Processor codenamed Skylake. This hardware is ideal for tasks that require a moderate increase of vCPUs relative to memory.

N2 Instances -2nd Gen Intel Xeon Scalable Processors (code name Cascade Lake)

The general-purpose n2 instances are based on second-generation Intel Xeon Scalable Processor, codenamed Cascade Lake. GCP n2 instances benefit from higher clock frequency for better per-thread performance and memory-to-core ratio. These instances also benefit from Intel’s new DL Boost instructions, which improve int8 performance of inference workloads.

C2 Instances – Compute-Optimised with 2nd Gen Intel Xeon Scalable Processors (code name Cascade Lake)

The compute-optimised c2 instances are based on second-generation Intel Xeon Scalable Processor, codenamed Cascade Lake. GCP c2 instances offer the highest per core performance on Compute Engine, up to 3.8 GHz sustained all-core turbo and all the advantages of a fine-tunable architecture. These instances also benefit from Intel’s new DL Boost instructions, which improve int8 performance of inference workloads.

Nvidia GPU K80 & P100

First NVIDIA GPUs available on Google Cloud Platform.

Nvidia GPU V100

The Tesla V100 is the world’s most advanced dataset GPU for AI and HPC.

Nvidia GPU T4

The Tesla T4 is optimised for AI and Single Precision to obtain the best price and performance with minimum power consumption.

The hardware platform can be easily selected when creating a new VM from a drop-down list or via appropriate flags through the APIs. For more information about the machine types and region availability please refer to the official Google Cloud documentation.

Training and Batch Inference performance on the Top-5 UK retailer model

The solution built for the Top-5 UK retailer consists of a Wide and Deep model trained on millions of customers, thousands of products and billions of interactions. This is the same model used for the previous benchmark on Intel Skylake.

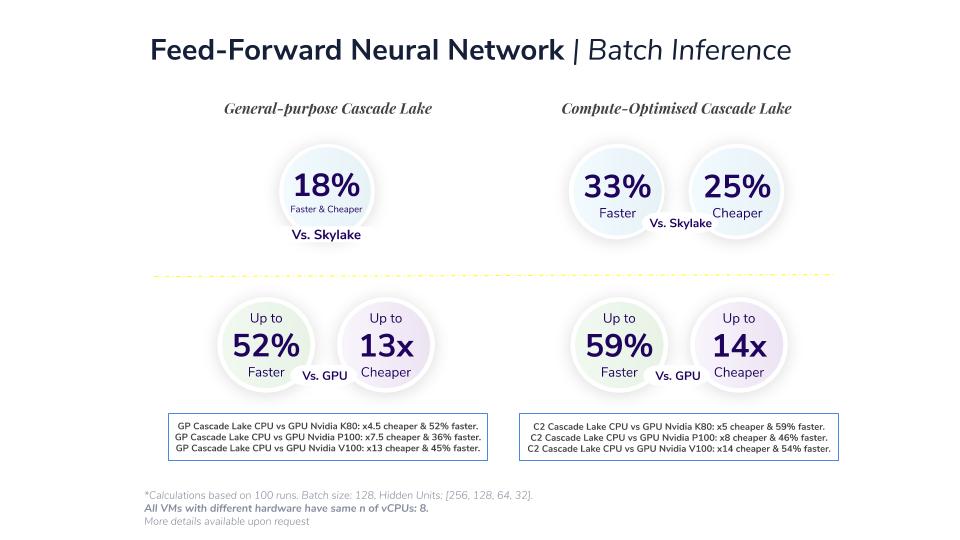

Benchmark results show that CPU is better than GPU for both training and batch inference for the Recommender System. Specifically, compute-optimised Cascade Lake is the best architecture;

even though it is slightly more expensive than the general-purpose Cascade Lake, it is 15% faster which makes it the best option for price as well.

Online Inference performance on the Lush model

The model built for Lush is a Computer Vision model embedded in the Lush Labs App as a new feature called Lush Lens, enabling customers to browse over products in-store using their phone camera as a recognition device in order to get product information on the app. The aim: to give customers better shopping experiences and reduce the business’ impact on the environment by removing unnecessary labelling and packaging. The solution uses Transfer Learning on an expanded MobileNet architecture, the most performant Convolutional Neural Network architecture for models to be deployed on mobile. CNNs are well known to behave better on a GPU for training, and as an extension for batch inference. This is why we focused our benchmark specifically on online inference, activated via TensorFlow Serving.

Benchmark results on online inference with the CNN show once again that Compute-Optimised Cascade Lake is the best architecture to run online inference for the Computer Vision model.

“We are pleased with the research carried out by Datatonic using the Product Classification Computer Vision model in our Lush Lens app as a benchmark. Our model runs on mobile at the moment, but we would consider moving to 2nd Gen Intel Xeon Scalable Processors in the future as the best hardware solution for new applications of our model on Google Cloud.” – Simon Ince, Tech Research & Development Lead, Lush Cosmetics

So, where do you run your Machine Learning solution?

All ML solutions are unique, and using different data or model parameters will often lead to deeply varying performance even when using the same model architecture.

Keeping this in mind, the chart below gives a picture of where you should run your ML solution.

Overall, GPUs are extremely powerful hardware for ML, but moving data from memory to the GPU itself can be too demanding a bottleneck. If the batch size is large, GPUs are most likely to perform better. However, it is typically best to keep a small batch size (you have probably seen 128 as the most typical batch size in all tutorials, it is also the batch size used for the benchmarks on training and batch inference) as this acts as regularization. From this premise, if speed is your goal then it follows that:

- Multi-layer perceptron architectures are best on CPU for both training and batch prediction. These are the typical architectures for recommender systems, propensity models, and most solutions involving tabular data.

- Convolutional Neural Networks work best on GPU for both training and batch prediction. This is the typical architecture for Computer Vision models.

- Online Inference is best on CPU for any architecture, as the batch size is just 1 and the I/O operation is too demanding on the GPU performance.

If cost is more important than speed, then CPUs may be your best solution in all cases. In fact, even though GPUs ran faster for CNNs and large batch sizes, the increase in speed may not be fast enough to make GPUs cost-effective given that they are up to 6 times more expensive than CPUs on Google Cloud Platform.

Compute-optimised Intel Cascade Lake has proven throughout our benchmarking to be the best CPU solution available in GCP and it is, therefore, our recommended hardware to perform R&D for all Machine Learning pipelines and productionization for the cases highlighted in the flow chart above.

Are you interested to share your R&D results with us or understand how your Machine Learning workflows can benefit from state-of-the-art deployment on Google Cloud Platform and Intel? Contact us or reach out to our team at hello@datatonic.com.